node-neural-network

node-neural-network is a JavaScript neural network library for Node.js and the browser. Its generalized algorithm is architecture-free, so you can build and train basically any type of first-order or even second-order neural network architecture. It is based on Synaptic.

This library includes a few built-in architectures, such as multilayer perceptrons, multilayer long short-term memory (LSTM) networks, liquid state machines, and Hopfield networks, along with a trainer capable of training any given network. The trainer includes built-in training tasks/tests, such as solving an XOR, completing a Distracted Sequence Recall task, or passing an Embedded Reber Grammar test, so you can easily test and compare the performance of different architectures.
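As a rough illustration of what the XOR task asks a network to learn, here is a minimal sketch in plain JavaScript that does not use this library at all. The function names (`sigmoid`, `xorNet`, `meanSquaredError`) and the weights are hypothetical, hand-picked for the example rather than produced by the trainer.

```javascript
// Logistic sigmoid, a common squashing function for perceptron units.
function sigmoid(x) {
  return 1 / (1 + Math.exp(-x));
}

// Forward pass of a tiny 2-2-1 perceptron.
// The weights are hand-picked for illustration (assumption: they are NOT
// what the library's trainer would produce).
function xorNet(x1, x2) {
  var h1 = sigmoid(20 * x1 + 20 * x2 - 10);  // behaves roughly like OR
  var h2 = sigmoid(-20 * x1 - 20 * x2 + 30); // behaves roughly like NAND
  return sigmoid(20 * h1 + 20 * h2 - 30);    // behaves roughly like AND
}

// The XOR task written as four input/target pairs.
var xorSet = [
  { input: [0, 0], output: 0 },
  { input: [0, 1], output: 1 },
  { input: [1, 0], output: 1 },
  { input: [1, 1], output: 0 }
];

// Mean squared error of a candidate network function over a sample set.
function meanSquaredError(net, set) {
  var sum = 0;
  set.forEach(function (sample) {
    var diff = net(sample.input[0], sample.input[1]) - sample.output;
    sum += diff * diff;
  });
  return sum / set.length;
}

console.log(meanSquaredError(xorNet, xorSet));
```

A trainer's job is to find weights like these automatically; the error value gives a simple way to compare how well different architectures solve the same task.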

The algorithm implemented by this library has been taken from Derek D. Monner's paper:

A generalized LSTM-like training algorithm for second-order recurrent neural networks

The source code includes comments that reference the equations in that paper.

Introduction

If you have no prior knowledge about Neural Networks, you should start by reading this guide.

Demos

- Solve an XOR

- Discrete Sequence Recall Task

- Learn Image Filters

- Paint an Image

- Self Organizing Map

- Read from Wikipedia

The source code of these demos can be found in this branch.

Getting started

Overview

Installation

In node

You can install lucasBertola/node-neural-network with npm:

    npm install node-neural-network --save

In the browser

Include the file NodeNeuralNetwork.min.js from the /dist directory with a script tag in your HTML.

Usage

    var NodeNeuralNetwork = require('node-neural-network'); // this line is not needed in the browser
    var Neuron = NodeNeuralNetwork.Neuron,
        Layer = NodeNeuralNetwork.Layer,
        Network = NodeNeuralNetwork.Network,
        Trainer = NodeNeuralNetwork.Trainer,
        Architect = NodeNeuralNetwork.Architect;

Now you can start to create networks, train them, or use built-in networks from the Architect.

Gulp Tasks

- gulp: runs all the tests and builds the minified and unminified bundles into /dist.

- gulp build: builds the bundle: /dist/NodeNeuralNetwork.js.

- gulp min: builds the minified bundle: /dist/NodeNeuralNetwork.min.js.

- gulp debug: builds the bundle /dist/NodeNeuralNetwork.js with sourcemaps.

- gulp dev: same as gulp debug, but watches the source files and rebuilds when any change is detected.

- gulp test: runs all the tests.

Examples

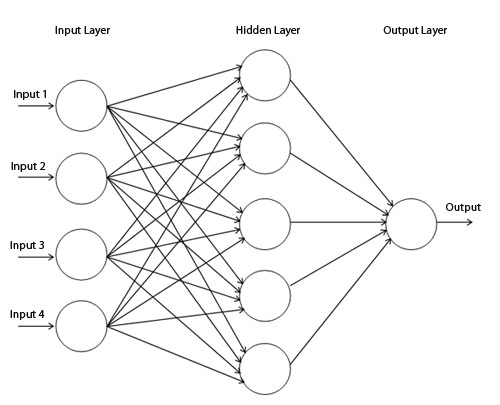

Perceptron

This is how you can create a simple perceptron:

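    function Perceptron(input, hidden, output)
    {
        // create the layers
        var inputLayer = new Layer(input);
        var hiddenLayer = new Layer(hidden);
        var outputLayer = new Layer(output);

        // connect the layers
        inputLayer.project(hiddenLayer);
        hiddenLayer.project(outputLayer);

        // set the layers
        this.set({
            input: inputLayer,
            hidden: [hiddenLayer],
            output: outputLayer
        });
    }

    // extend the prototype chain
    Perceptron.prototype = new Network();
    Perceptron.prototype.constructor = Perceptron;

Now you can test your new network by creating a trainer and teaching the perceptron to learn an XOR: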

    var myPerceptron = new Perceptron(2, 3, 1);
    var myTrainer = new Trainer(myPerceptron);

    myTrainer.XOR(); // { error: 0.004998819355993572, iterations: 21871, time: 356 }

    myPerceptron.activate([0, 0]); // 0.0268581547421616
    myPerceptron.activate([1, 0]); // 0.9829673642853368
    myPerceptron.activate([0, 1]); // 0.9831714267395621
    myPerceptron.activate([1, 1]); // 0.02128894618097928

Long Short-Term Memory

This is how you can create a simple long short-term memory network with input gate, forget gate, output gate, and peephole connections:

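    function LSTM(input, blocks, output)
    {
        // create the layers
        var inputLayer = new Layer(input);
        var inputGate = new Layer(blocks);
        var forgetGate = new Layer(blocks);
        var memoryCell = new Layer(blocks);
        var outputGate = new Layer(blocks);
        var outputLayer = new Layer(output);

        // connections from input layer
        var input = inputLayer.project(memoryCell);
        inputLayer.project(inputGate);
        inputLayer.project(forgetGate);
        inputLayer.project(outputGate);

        // connections from memory cell
        var output = memoryCell.project(outputLayer);

        // self-connection
        var self = memoryCell.project(memoryCell);

        // peepholes
        memoryCell.project(inputGate);
        memoryCell.project(forgetGate);
        memoryCell.project(outputGate);

        // gates
        inputGate.gate(input, Layer.gateType.INPUT);
        forgetGate.gate(self, Layer.gateType.ONE_TO_ONE);
        outputGate.gate(output, Layer.gateType.OUTPUT);

        // input to output direct connection
        inputLayer.project(outputLayer);

        // set the layers of the neural network
        this.set({
            input: inputLayer,
            hidden: [inputGate, forgetGate, memoryCell, outputGate],
            output: outputLayer
        });
    }

    // extend the prototype chain
    LSTM.prototype = new Network();
    LSTM.prototype.constructor = LSTM;

These are examples for explanatory purposes; the Architect already includes Multilayer Perceptron and Multilayer LSTM network architectures.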
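To make the roles of the input gate, forget gate, output gate, and peepholes in the LSTM example more concrete, here is a minimal scalar sketch of one time step of a single memory cell in plain JavaScript. It does not use this library; the names (`lstmStep`, `gateValue`, the `weights` layout), the `tanh` squashing of the cell state, and the weight values are all assumptions made for illustration, not the library's internals.

```javascript
function sigmoid(x) {
  return 1 / (1 + Math.exp(-x));
}

// Value of one gate: it sees the external input and, through its
// peephole connection, the activation of the memory cell.
function gateValue(gate, x, cellActivation) {
  return sigmoid(gate.x * x + gate.peephole * cellActivation + gate.bias);
}

// One time step of a single LSTM memory cell with a scalar input `x`.
// `prev` is { state, activation } from the previous step.
function lstmStep(x, prev, w) {
  // Input and forget gates peek at the previous cell activation.
  var inputGate = gateValue(w.inputGate, x, prev.activation);
  var forgetGate = gateValue(w.forgetGate, x, prev.activation);

  // New state: the self-connection scaled by the forget gate,
  // plus the incoming signal scaled by the input gate.
  var state = forgetGate * prev.state + inputGate * (w.cell.x * x);
  var activation = Math.tanh(state); // assumption: tanh squashing

  // The output gate peeks at the freshly updated cell activation.
  var outputGate = gateValue(w.outputGate, x, activation);

  return {
    state: state,
    activation: activation,
    output: outputGate * activation
  };
}

// Hypothetical weights: a forget gate biased toward "remember".
var weights = {
  inputGate: { x: 4, peephole: 0, bias: -2 },
  forgetGate: { x: 0, peephole: 0, bias: 5 },
  outputGate: { x: 0, peephole: 1, bias: 0 },
  cell: { x: 1 }
};

// Feed a single pulse followed by silence and watch the state persist.
var cell = { state: 0, activation: 0 };
[1, 0, 0].forEach(function (x) {
  cell = lstmStep(x, cell, weights);
  console.log(cell.state, cell.output);
});
```

With a forget gate close to 1 and an input gate that closes when the input is silent, the cell state decays only slowly after the pulse, which is the behaviour that lets LSTM networks carry information across long sequences.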

Contribute

node-neural-network is an Open Source project. Anybody in the world is welcome to contribute to the development of the project.

If you want to contribute, feel free to send PRs; just make sure to run the default gulp task before submitting them. This way you'll run all the test specs and build the web distribution files.

<3