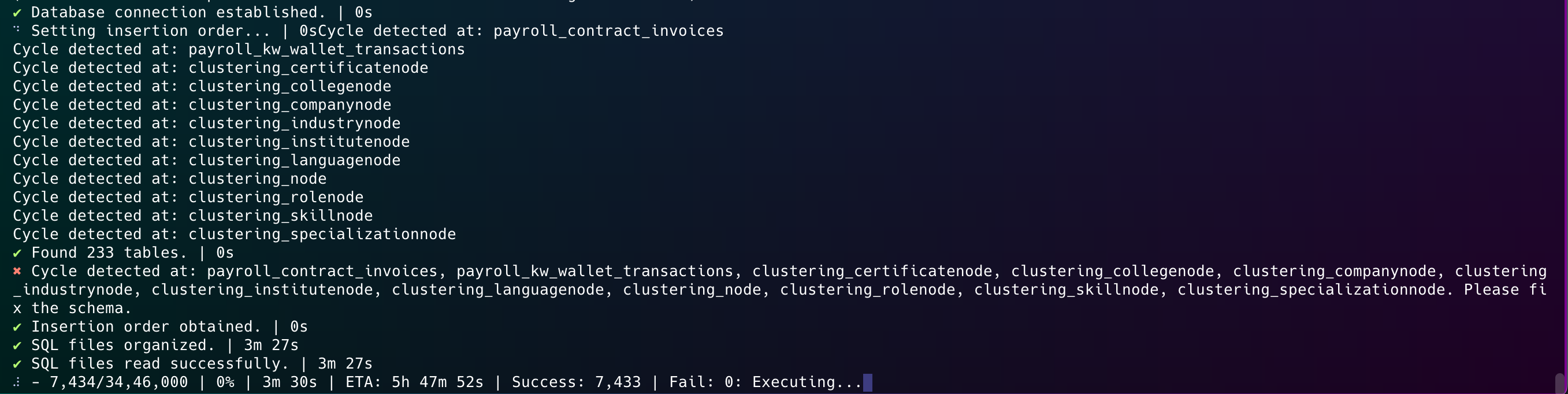

A Node.js utility for reliably importing large SQL dumps into PostgreSQL, with dependency-based table ordering and per-statement error handling.

Importing large SQL dumps often fails due to:

- Foreign key constraint violations

- Memory limitations with large files

- Lack of proper error handling and recovery

- No visibility into progress or errors

This tool addresses these problems by splitting the dump into chunks, ordering INSERT statements by table dependencies, and tracking the outcome of each statement.
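The chunking idea can be illustrated with a minimal sketch. It assumes an INSERT-style dump (for example one produced by `pg_dump --inserts`) and is not the code in `lib/sql-file-executer.js`; `splitStatements` and `chunk` are hypothetical names. The splitter respects single-quoted strings, double-quoted identifiers and `--` comments, but not dollar-quoted bodies (`$$ ... $$`) or backslash escapes in `E'...'` strings.

```javascript
// Hypothetical sketch: split SQL text into statements without needing the
// whole dump as one string. `pieces` is any iterable of text fragments.
function* splitStatements(pieces) {
  let current = "";
  let inSingle = false; // inside '...' (a doubled '' toggles twice, so it stays inside)
  let inDouble = false; // inside "..." identifier
  let inComment = false; // inside a -- comment, until end of line
  for (const piece of pieces) {
    for (const ch of piece) {
      if (inComment) {
        if (ch === "\n") {
          inComment = false;
          current += ch;
        }
        continue; // drop comment text
      }
      if (ch === "'" && !inDouble) inSingle = !inSingle;
      else if (ch === '"' && !inSingle) inDouble = !inDouble;
      else if (ch === "-" && !inSingle && !inDouble && current.endsWith("-")) {
        current = current.slice(0, -1); // remove the first '-' of '--'
        inComment = true;
        continue;
      } else if (ch === ";" && !inSingle && !inDouble) {
        const stmt = current.trim();
        if (stmt) yield stmt + ";";
        current = "";
        continue;
      }
      current += ch;
    }
  }
  const tail = current.trim();
  if (tail) yield tail; // final statement without a trailing ';'
}

// Group statements into fixed-size batches (e.g. one batch per temporary file).
function* chunk(iterable, size) {
  let batch = [];
  for (const item of iterable) {
    batch.push(item);
    if (batch.length === size) {
      yield batch;
      batch = [];
    }
  }
  if (batch.length > 0) yield batch;
}

// Example: two text fragments, as if read from a file piece by piece.
const dump = [
  "-- users first\nINSERT INTO users VALUES (1, 'a;b');\n",
  "INSERT INTO posts VALUES (1, 1, 'it''s');\nINSERT INTO posts VALUES (2, 1, 'x');",
];
const batches = [...chunk(splitStatements(dump), 2)];
console.log(batches.map((b) => b.length)); // [ 2, 1 ]
```

Because both functions are generators, a consumer that iterates lazily with `for...of` holds only the current statement and batch in memory. Reading a real file would need an `async function*` variant fed by `readline` with `for await`, re-adding the `\n` that `readline` strips so that `--` comments still terminate.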

- Clone the repository and install dependencies:

  ```bash
  git clone git@github.com:meghoshpritam/db-helper.git
  cd db-helper
  pnpm install
  ```

- Configure the database connection in `.env`:

  ```
  DB_USER=user
  DB_PASSWORD=password
  DB_HOST=localhost
  DB_DATABASE=db
  DB_PORT=5432
  DB_SCHEMA=public
  ```

- Run the executor:

  ```bash
  # With a Prisma schema for table ordering
  node lib/sql-file-executer.js path/to/dump.sql -s path/to/schema.prisma

  # Without a schema
  node lib/sql-file-executer.js path/to/dump.sql
  ```

Features:

- Breaks down large SQL files into manageable chunks

- Analyzes table dependencies using a Prisma schema

- Executes INSERT statements in dependency order

- Logs progress and tracks errors in detail

- Cleans up temporary files automatically

- Continues execution even if individual statements fail
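Dependency-based ordering amounts to a topological sort over the foreign-key relations declared in the schema. The following is a minimal sketch, not the tool's actual implementation: `tableOrderFromPrisma` and `orderInserts` are hypothetical names, and the line-based regex scan is a simplification of real Prisma schema parsing. It assumes one field per line, relations declared on the foreign-key side as `@relation(fields: [...], references: [...])`, and optional `@@map("...")` table names.

```javascript
// Hypothetical sketch: derive a parent-first table order from a Prisma
// schema, then reorder INSERT statements by it.
function tableOrderFromPrisma(schema) {
  const models = []; // { name, table, parents } in declaration order
  let current = null;
  for (const line of schema.split("\n")) {
    const start = line.match(/^\s*model\s+(\w+)\s*\{/);
    if (start) {
      current = { name: start[1], table: start[1], parents: new Set() };
      models.push(current);
      continue;
    }
    if (!current) continue; // skip datasource, generator and enum blocks
    if (/^\s*\}/.test(line)) {
      current = null;
      continue;
    }
    const mapped = line.match(/@@map\(\s*"([^"]+)"\s*\)/);
    if (mapped) current.table = mapped[1];
    // e.g. `author User? @relation(fields: [authorId], references: [id])`
    const rel = line.match(/^\s*\w+\s+(\w+)\??\s.*@relation\([^)]*fields\s*:/);
    if (rel && rel[1] !== current.name) current.parents.add(rel[1]); // ignore self-relations
  }

  // Repeated-pass topological sort: place a model once all its parents are placed.
  const known = new Set(models.map((m) => m.name));
  const placed = new Set();
  const order = [];
  let progress = true;
  while (progress) {
    progress = false;
    for (const m of models) {
      if (placed.has(m.name)) continue;
      if ([...m.parents].every((p) => placed.has(p) || !known.has(p))) {
        placed.add(m.name);
        order.push(m.table);
        progress = true;
      }
    }
  }
  // Models in (or depending on) a cycle cannot be ordered; append them as declared.
  for (const m of models) if (!placed.has(m.name)) order.push(m.table);
  return order;
}

// Stable-sort INSERT statements by their target table's rank; statements for
// tables missing from the order go last.
function orderInserts(statements, tableOrder) {
  const rank = new Map(tableOrder.map((t, i) => [t, i]));
  const rankOf = (sql) => {
    const m = sql.match(/^\s*INSERT\s+INTO\s+(?:"?\w+"?\.)?"?(\w+)"?/i);
    return m && rank.has(m[1]) ? rank.get(m[1]) : tableOrder.length;
  };
  return [...statements].sort((a, b) => rankOf(a) - rankOf(b));
}

// Usage: Post is declared before User but references it.
const schema = `
model Post {
  id       Int  @id
  authorId Int
  author   User @relation(fields: [authorId], references: [id])
  @@map("posts")
}

model User {
  id    Int    @id
  posts Post[]
  @@map("users")
}
`;
const order = tableOrderFromPrisma(schema);
const sorted = orderInserts(
  ['INSERT INTO "public"."posts" VALUES (1, 1);', 'INSERT INTO "public"."users" VALUES (1);'],
  order,
);
console.log(order); // [ 'users', 'posts' ]
```

INSERTs for tables the schema does not mention (for example Prisma's implicit many-to-many join tables) are placed last, after the tables they reference. Sorting every statement requires holding them all in memory, so for very large dumps one might instead group statements per table on disk and replay those groups in order.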
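Progress logging, temporary-file cleanup and continuing after failures map onto two small patterns: a `try`/`finally` around a temporary directory, and a per-statement `try`/`catch` that records errors instead of rethrowing. This sketch is illustrative only; `withTempDir`, `executeAll`, the `every` option and the summary shape are hypothetical, and `execute` stands in for whatever sends SQL to PostgreSQL (with node-postgres, `(sql) => client.query(sql)`).

```javascript
const fs = require("node:fs/promises");
const os = require("node:os");
const path = require("node:path");

// Hypothetical helper: create a temporary directory, pass it to `fn`, and
// delete it afterwards, whether `fn` resolves or throws.
async function withTempDir(fn) {
  const dir = await fs.mkdtemp(path.join(os.tmpdir(), "sql-chunks-"));
  try {
    return await fn(dir);
  } finally {
    await fs.rm(dir, { recursive: true, force: true });
  }
}

// Hypothetical executor: send statements one at a time through `execute`,
// record each failure instead of stopping, and log progress every `every`
// statements plus a final summary.
async function executeAll(statements, execute, { log = console.log, every = 1000 } = {}) {
  const summary = { total: 0, succeeded: 0, failed: 0, errors: [] };
  for (const sql of statements) {
    const index = summary.total++;
    try {
      await execute(sql);
      summary.succeeded++;
    } catch (err) {
      summary.failed++;
      summary.errors.push({ index, sql, message: err.message });
    }
    if (summary.total % every === 0) {
      log(`${summary.total} statements: ${summary.succeeded} ok, ${summary.failed} failed`);
    }
  }
  log(`done: ${summary.succeeded} ok, ${summary.failed} failed`);
  return summary;
}

// Usage with a fake `execute` that rejects one statement to simulate a
// foreign key violation.
const fakeExecute = async (sql) => {
  if (sql.includes("99")) throw new Error("simulated foreign key violation");
};

const run = withTempDir(async (dir) => {
  // Spill one chunk to disk and read it back, as a chunked import might.
  const file = path.join(dir, "chunk-0.json");
  const chunk = ["INSERT INTO users VALUES (1);", "INSERT INTO posts VALUES (1, 99);", "INSERT INTO users VALUES (2);"];
  await fs.writeFile(file, JSON.stringify(chunk));
  const batch = JSON.parse(await fs.readFile(file, "utf8"));
  return { dir, summary: await executeAll(batch, fakeExecute) };
});
```

Here the run logs `done: 2 ok, 1 failed` and the temporary directory is gone afterwards. Sending statements individually is slower than batching, but it ties every error to the exact statement that caused it; in PostgreSQL, several statements sent as one query string run as a single implicit transaction, so one bad row would roll back the rest of that batch.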

If you find this tool useful, please consider giving it a star on GitHub! Your support helps make the project more visible to others who might benefit from it.

Licensed under the Apache License 2.0.